Some time back I was reading this interview between Martin Geddes and Peter Clemons on 'The Crisis in UK Critical Communications'. If you haven't read it, I urge you to read it here. One thing that stuck out was as follows:

LTE was not designed for critical communications.

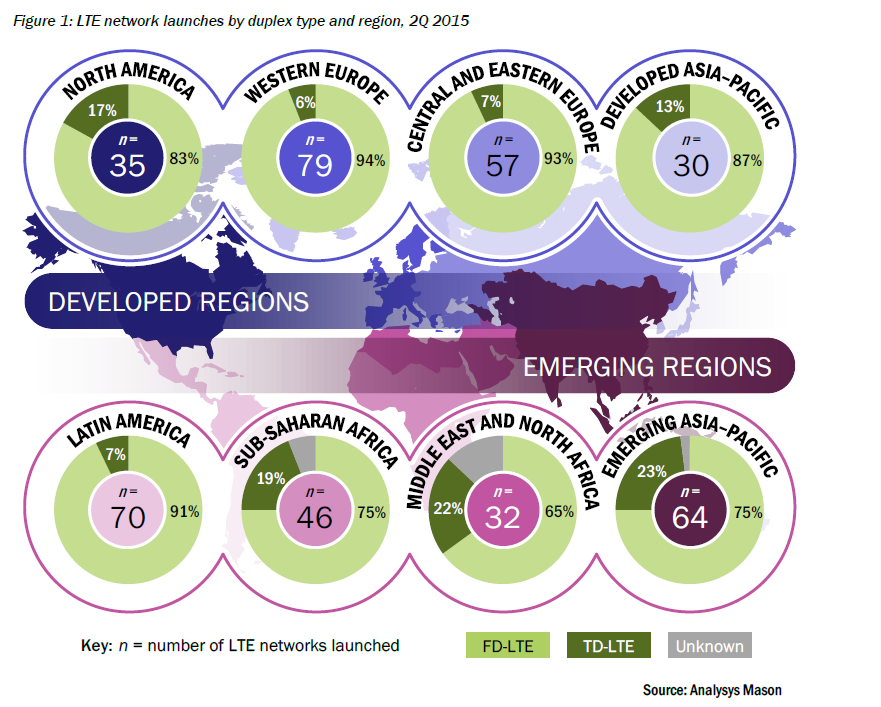

Commercial mobile operators have moved from GSM to UMTS to LTE networks to reflect the strong growth in demand for mobile data services. Smartphones are now used for social media and streaming video. LTE technology fulfils a need to supply cheap mass market data communications.

So LTE is a data service at heart, and reflects the consumer and enterprise market shift from being predominantly voice-centric to data-centric. In this wireless data world you can still control quality to a degree. So with OFDM-A modulation we have reduced latency. We have improved how we allocate different resource blocks to different uses.

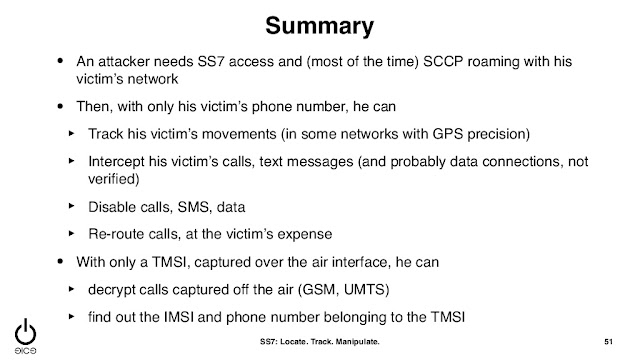

The marketing story is that we should be able to allocate dedicated resources to emergency services, so we can assure voice communications and group calling even when the network is stressed. Unfortunately, this is not the case. Even the 3GPP standards bodies and mobile operators have recognised that there are serious technology limitations.

This means they face a reputational risk in delivering a like-for-like mission-critical voice service.

Won’t this be fixed by updated standards?

These lobbied 3GPP for capabilities specifically aimed at critical communications requirements. At the Edinburgh meeting in September 2014, 3GPP set up the SA6 specification group, the first new group in a decade.

The hope is that by taking the critical communications requirement into a separate stream, it will no longer hold up the mass market Release 12 LTE standard. Even with six meetings a year, this SA6 process will be a long one. By the end of the second meeting it had (as might be expected) only got as far as electing the chairman.

It will take time to scope out what can be achieved, and develop the critical communications functionality. For many players in the 3GPP process this is not a priority, since they are focusing solely on mass market commercial applications.

A similar point was made in another critical communications blog here:

LTE has emerged as a possible long-term replacement for TETRA in this age of mobile broadband and data. LTE offers unrivalled broadband capabilities for such applications as body-worn video streaming, digital imaging, automatic vehicle location, computer-assisted dispatch, mobile and command centre apps, web access, enriched e-mail, mobile video surveillance apps such as facial recognition, enhanced telemetry/remote diagnostics, GIS and many more. However, Phil Kidner, CEO of the TCCA, pointed out recently that it will take many LTE releases to get us to the point where LTE can match TETRA on key features such as group working, pre-emptive services, network resilience, call set-up times and direct mode.

The result being, we are at a point where we have two technologies, one offering what end users want, and the other offering what end users need. This has altered the discussion, where now instead of looking at LTE as a replacement, we can look at LTE as a complementary technology, used alongside TETRA to give end users the best of both worlds. Now the challenge appears to be how we can integrate TETRA and LTE to meet the needs and wants of our emergency services, and it seems that if we want to look for guidance and lessons on the possible harmony of TETRA and LTE we should look at the Middle East.

While I was researching, I came across this interesting presentation (embedded below) from the LTE World Summit 2015.

The above is an interesting SWOT (Strengths, Weaknesses, Opportunities and Threats) analysis for TETRA and LTE. While I can understand that LTE is as yet unproven, I agree on the lack of spectrum and appropriate bands.

I have been told in the past that it's not just the technology that is an issue; TETRA has many functionalities that would need to be duplicated in LTE.

As you can see from the timeline above, while Rel-13 and Rel-14 will have some of these features, there are still other features that need to be included. Without them, the safety of critical communication workers and the public could be compromised.

The complete presentation is as follows. Feel free to voice your opinions via comments.