I have spent many years in the Test and Measurement industry, both as a hands-on engineer testing solutions and as a field engineer testing various solutions pre- and post-deployment. Over the years I have used various attenuators and dummy loads. It was nice to finally look at the different types of dummy loads and understand how they work in this R&S video.

So what exactly is a dummy load? At its core, it is a special kind of termination designed to absorb radio frequency energy safely. Instead of letting signals radiate into the air, a dummy load converts the RF power into heat. Think of it as an antenna that never actually transmits anything. This makes it invaluable when testing transmitters because you can run them at full power without interfering with anyone else’s spectrum.

Ordinary terminations are widely used in test setups but they are usually only good for low power. If you need to deal with more than about a watt of power, that is where dummy loads come in. Depending on their design, they can handle anything from a few watts to many kilowatts. To survive these power levels, dummy loads need a way to get rid of the heat. The most common are dry loads with large heatsinks that shed heat into the air. For higher powers, wet loads use liquids such as water or oil to absorb and move heat away more efficiently. Some combine both air and liquid cooling to push the limits even further.
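To get a feel for why liquid cooling helps at higher powers, here is a minimal Python sketch of the steady-state energy balance for a wet load. It assumes all of the RF power ends up as heat in the coolant and uses approximate textbook values for water. The function name, the 1 kW power level and the 2 L/min flow rate are my own illustrative assumptions, not figures from the video.

```python
# Rough steady-state estimate of coolant temperature rise in a liquid-cooled load.
# Assumption: all RF power is converted to heat and carried away by the coolant.

WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for water
WATER_DENSITY_KG_PER_L = 1.0             # approximate value for water


def coolant_temp_rise_k(rf_power_w: float, flow_l_per_min: float) -> float:
    """Return the coolant temperature rise in kelvin: delta_T = P / (m_dot * c)."""
    if flow_l_per_min <= 0:
        raise ValueError("flow rate must be positive")
    mass_flow_kg_per_s = flow_l_per_min * WATER_DENSITY_KG_PER_L / 60.0
    return rf_power_w / (mass_flow_kg_per_s * WATER_SPECIFIC_HEAT_J_PER_KG_K)


if __name__ == "__main__":
    # Hypothetical example values, chosen only for illustration.
    rise = coolant_temp_rise_k(rf_power_w=1000.0, flow_l_per_min=2.0)
    print(f"Coolant temperature rise: {rise:.1f} K")
```

With these assumed numbers the water warms by roughly 7 K on its way through the load, which shows how even a modest flow can carry away a kilowatt of heat.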

Good dummy loads are not just about heat management. They also need to provide a stable impedance match, usually 50 ohms, across a wide frequency range. This minimises reflections and ensures accurate testing. Many dummy loads cover frequencies up to several gigahertz with low standing wave ratios. Ultra broadband designs, such as the Rohde & Schwarz UBL100, go up to 18 GHz and can safely absorb power levels in the kilowatt range.
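To put numbers on what a good match means, the standard transmission-line relations between load impedance, reflection coefficient, VSWR and return loss can be sketched in a few lines of Python. The function names and the 55 ohm example load are my own assumptions for illustration, not values from the video.

```python
import math


def reflection_coefficient(z_load: complex, z0: float = 50.0) -> complex:
    """Voltage reflection coefficient of a load on a line of impedance z0."""
    return (z_load - z0) / (z_load + z0)


def vswr(gamma: complex) -> float:
    """Voltage standing wave ratio for a given reflection coefficient."""
    mag = abs(gamma)
    if mag >= 1.0:
        return math.inf  # total reflection (open, short or purely reactive load)
    return (1.0 + mag) / (1.0 - mag)


def return_loss_db(gamma: complex) -> float:
    """Return loss in dB (larger means a better match)."""
    mag = abs(gamma)
    if mag == 0.0:
        return math.inf  # perfect match, nothing reflected
    return -20.0 * math.log10(mag)


if __name__ == "__main__":
    # Hypothetical load that is slightly off the nominal 50 ohms.
    gamma = reflection_coefficient(55.0)
    print(f"VSWR: {vswr(gamma):.2f}")
    print(f"Return loss: {return_loss_db(gamma):.1f} dB")
    print(f"Reflected power: {abs(gamma) ** 2 * 100:.2f} %")
```

A load that is 10% off nominal, 55 ohms in this example, still gives a VSWR of 1.1 and reflects only about 0.2% of the power, which is why a low VSWR figure is a good shorthand for a well-behaved load.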

Some dummy loads even add extra features. A sampling port allows you to monitor the input signal at a reduced level. Interlock protection can shut down a connected transmitter if the load gets too hot. These touches make dummy loads more versatile and safer in real-world use.
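As a rough illustration of how a sampling port and an interlock might be reasoned about, here is a small Python sketch. It treats the sampling port as a fixed attenuation in dB and the interlock as a simple temperature threshold. The 40 dB sampling factor, the temperature values and all function names are hypothetical, so check the datasheet of a real load for its actual figures and behaviour.

```python
import math


def watts_to_dbm(power_w: float) -> float:
    """Convert power in watts to dBm."""
    if power_w <= 0:
        raise ValueError("power must be positive")
    return 10.0 * math.log10(power_w * 1000.0)


def dbm_to_watts(power_dbm: float) -> float:
    """Convert power in dBm to watts."""
    return 10.0 ** (power_dbm / 10.0) / 1000.0


def sampled_level_dbm(input_power_w: float, sampling_attenuation_db: float) -> float:
    """Level at the sampling port, assuming a fixed attenuation from the input."""
    return watts_to_dbm(input_power_w) - sampling_attenuation_db


def interlock_should_trip(load_temp_c: float, trip_temp_c: float) -> bool:
    """Simplified interlock: trip once the load reaches the threshold temperature."""
    return load_temp_c >= trip_temp_c


if __name__ == "__main__":
    # Hypothetical values: 1 kW transmitter, 40 dB sampling port, 90 C trip point.
    level = sampled_level_dbm(input_power_w=1000.0, sampling_attenuation_db=40.0)
    print(f"Sampling port level: {level:.1f} dBm ({dbm_to_watts(level) * 1000:.0f} mW)")
    print(f"Interlock tripped at 95 C: {interlock_should_trip(95.0, 90.0)}")
```

In this example a 1 kW (60 dBm) input shows up as 20 dBm, or 100 mW, at the sampling port, a level that is far more comfortable to connect to a spectrum analyser or power sensor.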

In day-to-day testing, dummy loads help not only to protect transmitters but also to get accurate measurements. By acting as a perfectly matched, non-radiating antenna, they give engineers confidence that they are measuring the true transmitter output. They can also be used to quickly check feedlines and connectors by substituting them in place of an antenna.

Rohde & Schwarz have put together a useful explainer video that covers all of this in a simple, visual way. You can watch it below to get a clear overview of dummy loads and why they matter so much in RF testing.

Related Posts:

- The 3G4G Blog: New 5G NTN Spectrum Bands in FR1 and FR2

- Free 6G Training: Non-Terrestrial Networks (NTN) & Satellites - Taking 6G to the Stars

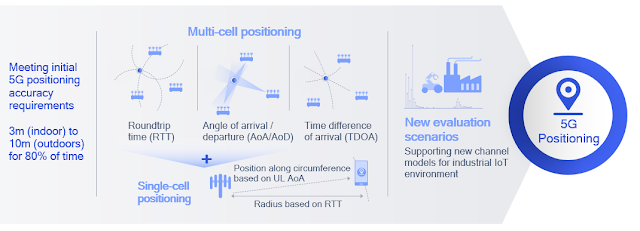

- The 3G4G Blog: Positioning Techniques for 5G NR in 3GPP Release-16

- Free 6G Training: Spectrum for 5G, Beyond 5G and 6G research

- The 3G4G Blog: Key Technology Aspects of 5G Security by Rohde & Schwarz

- The 3G4G Blog: R&S Webinar on LTE-A Pro and evolution to 5G