Since the industry realised how the 5G Network Architecture will look like, Network Slicing has been touted as the killer business case that will allow the mobile operators to generate revenue from new sources.

Last month ABI Research said in a press release:

According to global technology intelligence firm ABI Research, 5G slicing revenue is expected to grow from US$309 million in 2022 to approximately US$24 billion in 2028, at a Compound Annual Growth Rate (CAGR) of 106%.

“5G slicing adoption falls into two main categories. One, there is no connectivity available. Two, there is connectivity, but there is not sufficient capacity, coverage, performance, or security. For the former, both private and public organizations are deploying private network slices on a permanent and ad hoc basis,” highlights Don Alusha, 5G Core and Edge Networks Senior Analyst at ABI Research. The second scenario is mostly catered by private networks today, a market that ABI Research expects to grow from US$3.6 billion to US$109 billion by 2023, at a CAGR of 45.8%. Alusha continues, “A sizable part of this market can be converted to 5G slicing. But first, the industry should address challenges associated with technology and commercial models. On the latter, consumers’ and enterprises’ appetite to pay premium connectivity prices for deterministic and tailored connectivity services remains to be determined. Furthermore, there are ongoing industry discussions on whether the value that comes from 5G slicing can exceed the cost required to put together the underlying slicing ecosystem.”

I recently published IDC's first forecast on 5G network slicing services opportunity. Slicing should be an important tool for telcos to create new services, but it still remains many years away in most markets. A very complicated undertakinghttps://t.co/GNY4xiLFBV

— Daryl Schoolar (@DHSchoolar) April 29, 2022

Earlier this year, Daryl Schoolar - Research Director at IDC tackled this topic in his blog post:

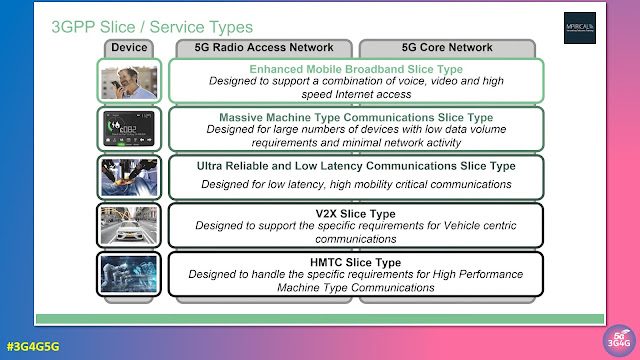

5G network slicing, part of the 3GPP standards developed for 5G, allows for the creation of multiple virtual networks across a single network infrastructure, allowing enterprises to connect with guaranteed low latency. Using principles behind software-defined network and network virtualization, slicing allows the mobile operator to provide differentiated network experience for different sets of end users. For example, one network slice could be configured to support low latency, while another slice is configured for high download speeds. Both slices would run across the same underlying network infrastructure, including base stations, transport network, and core network.

Network slicing differs from private mobile networks, in that network slicing runs on the public wide area network. Private mobile networks, even when offered by the mobile operator, use infrastructure and spectrum dedicated to the end user to isolate the customer’s traffic from other users.

5G network slicing is a perfect candidate for future business connectivity needs. Slicing provides a differentiated network experience that can better match the customers performance requirements than traditional mobile broadband. Until now, there has been limited mobile network performance customization outside of speeds. 5G network slicing is a good example of telco service offerings that meet future of connectivity requirements. However, 5G network slicing also highlights the challenges mobile operators face with transformation in their pursuit of remaining relevant.

For 5G slicing to have broad commercial availability, and to provide a variety of performance options, several things need to happen first.

- Operators need to deploy 5G Standalone (SA) using the new 5G mobile core network. Currently most operators use the 5G non-standalone (NSA) architecture that relies on the LTE mobile core. It might be the end of 2023 before the majority of commercial 5G networks are using the SA mode.

- Spectrum is another hurdle that must be overcome. Operators still make most of their revenue from consumers, and do not want to compromise the consumer experience when they start offering network slicing. This means operators need more spectrum. In the U.S., among the three major mobile operators, only T-Mobile currently has a nationwide 5G mid-band spectrum deployment. AT&T and Verizon are currently deploying in mid-band, but that will not be completed until 2023.

- 5G slicing also requires changes to the operator’s business and operational support systems (BSS/OSS). Current BSS/OSS solutions were not designed to support the increased parameters those systems were designed to support.

- And finally, mobile operators still need to create the business propositions around commercial slicing services. Mobile operators need to educate businesses on the benefits of slicing and how slicing supports their different connectivity requirements. This could involve mobile operators developing industry specific partnerships to reach different business segments. All these things take time to be put into place.

Because of the enormity of the tasks needed to make 5G network slicing a commercial success, IDC currently has a very conservative outlook for this service through 2026. IDC believes it will be 2023 until there is general commercial availability of 5G network slicing. The exception is China, which is expected to have some commercial offerings in 2022 as it has the most mature 5G market. Even then, it will take until 2025 before global revenues from slicing exceeds a billion U.S. dollars. In 2026 IDC forecasts slicing revenues will be approximately $3.2 billion. However, over 80% of those revenues will come out of China.

I'll put my hand up:

— Dean Bubley (@disruptivedean) July 14, 2022

When it comes to #5G, I'm an unashamed #SliceDenier

Network slicing is one of the mobile industry's worst strategic errors since IMS.https://t.co/lc7EJ8SgP1 pic.twitter.com/Nz1vvNS9bA

The 'Outspoken Industry Analyst' Dean Bubley believes that Network Slicing is one of the worst strategic errors made by the mobile industry, since the catastrophic choice of IMS for communications applications. In a LinkedIn post he explains:

At best, slicing is an internal toolset that might allow telco operations or product teams (or their vendors) to manage their network resources. For instance, it could be used to separate part of a cell's capacity for FWA, and dynamically adjust that according to demand. It might be used as an "ingredient" to create a higher class of service for enterprise customers, for instance for trucks on a highway, or as part of an "IoT service" sold by MNOs. Public safety users might have an expensive, artisanal "hand-carved" slice which is almost a separate network. Maybe next-gen MVNOs.

(I'm talking proper 3GPP slicing here - not rebranded QoS QCI classes, private APNs, or something that looks like a VLAN, which will probably get marketed as "slices")

But the idea that slicing is itself a *product*, or that application developers or enterprises will "buy a slice" is delusional.

Firstly, slices will be dependent on [good] coverage and network control. A URLLC slice likely won't work reliably indoors, underground, in remote areas, on a train, on a neutral-host network, or while roaming. This has been a basic failure of every differentiated-QoS monetisation concept for many years, and 5G's often-higher frequencies make it worse, not better.

Secondly, there is no mature machinery for buying, selling, testing, supporting. price, monitoring slices. No, the 5G Network Exposure Function won't do it all. I haven't met a Slice salesperson yet, or a Slice-procurement team.

Thirdly, a "local slice" of a national 5G network will run headlong into a battle with the desire for separate private/dedicated local 5G networks, which may well be cheaper and easier. It also won't work well with the enterprise's IT/OT/IP domains, out of the box.

Also there's many challenges getting multi-operator slices, device OS links to slice APIs, slice "boundary controllers" between operators, aligning RAN and core slices, regulatory questionmarks and much more.

There are lots of discussion in the comments section that may be of interest to you, here.

My belief is that we will see lots of interesting use cases with slicing in public networks but it will be difficult to monetise. The best networks will manage to do it to create some plans with guaranteed rates and low latency. It would remain to be see whether they can successfully monetise it well enough.

New 5G Americas Whitepaper: Commercializing 5G Network Slicing - https://t.co/nEDKoTShSQ #Free5Gtraining #3G4G5G #5G #5GNetwork #5GTechnology #NetworkSlicing #5GSlicing #5GS #5GCore #5GStandalone #Orchestration #Automation #NFV #MultiTenancy #Vertical #Horizontal #Slicing pic.twitter.com/juatw3vCwq

— Free 5G Training (@5Gtraining) July 28, 2022

For technical people and newbies, there are lots of Network Slicing resources on this blog (see related posts 👇). Here is another recent video from Mpirical:

Related Posts:

- The 3G4G Blog: 5G Network Slicing for Beginners

- The 3G4G Blog: Network Slicing Tutorials and Other Resources

- The 3G4G Blog: Network Slicing using User Equipment Route Selection Policy (URSP)

- The 3G4G Blog: Network Slicing in NG RAN

- The 3G4G Blog: How to Identify Network Slices in NG RAN

- The 3G4G Blog: QoS Flow Establishments in 5G Standalone RAN and Core

- The 3G4G Blog: 5G Slicing Templates

- The 3G4G Blog: End-to-end Network Slicing in 5G