At 'The Things Conference' in Amsterdam in September, Roman Nemish, Co-Founder & President of TEKTELIC, presented a critical view of different IoT technologies and argued that LoRaWAN is the only technology that will eventually make mass IoT possible.

The following is the intro to the talk from the conference:

IoT technology has progressed from home to city-scale applications, making it a crucial part of any operational process. IoT sensors are becoming more affordable, reliable, and easy to deploy.

The Internet of Things has already brought advancement to healthcare, retail, city infrastructure, and manufacturing with many other opportunities still open.

We are ready to explain why IoT deployment has transformed from privilege to necessity, what benefits it can bring to your business, and how you win the competition using IoT.

2 of 2: "The IoT game... is better served by smaller-sized providers without regional licenses offering broadly-equivalent technologies in unlicensed bands" - https://t.co/9QcqoiIRh0

— Robert Horvitz (@open_spectrum) September 30, 2022

Enterprise IoT Insights have a good take on the talk here. The following is an extract:

Nemish, president at TEKTELIC, argued that new-wave cellular IoT – in the form of NB-IoT and LTE-M, primarily – is “too expensive” for consumers and too small-margin for mobile operators; that “most IoT opportunities are 10-25 times smaller [than the kinds of deals that would] attract operator attention”. Cellular IoT has “vast potential”, he concluded, but requires a “different approach”.

In other words, there is not enough profit in (low-power) cellular IoT for mobile operators to give it proper focus – and the deals are not big enough to make them really care. The IoT game – based on finely-calculated returns on volume-deals not going much higher than 100,000 units at a time – is better served by smaller-sized providers, without regional spectrum licences, offering broadly-equivalent technologies in unlicensed bands, he implied.

But experiences with Sigfox and LoRaWAN (in some formats) – the French-born IoT twin-tech that started the whole low-power wide-area (LPWA) movement, and forced the cellular community to come up with their own alternatives – have not been much better, necessarily, the story goes. Sigfox pumped $350 million over 10 years into its technology and network, only to go into receivership at the start of 2022 with fewer than 20 million devices under management.

The problem, said Nemish, is with the business model, and not the tech. (As an aside, a takeaway from The Things Conference last week, as from the LoRaWAN World Expo in Paris in the summer, and from any number of private discussions in between, is the IoT market is mature enough to let go of its closely-held tech differences, and acknowledge that customers don’t really care so long as it works – and so the blame switches to the business model, instead.)

Nemish blamed Sigfox’s ‘failure’ on exclusive single-market contracts and crippling licensing fees; these “killed most operator business plans”, he suggested. Of course, Sigfox lives to see another day – and, it might be noted, Taiwan-based IoT house Unabiz, its new owners, have just hosted the 0GUN Alliance of Sigfox operators in France to bash-out a new operator model, and a collaborative approach to a “unified LPWAN world”.

And LoRaWAN is not exempt in the analysis, either. In Amsterdam, Nemish held up the madly-hyped Helium model for crypto-led community network building as another failed IoT business model. Again – and of course, with a critical appraisal of a LoRaWAN network by a LoRaWAN provider – the tech is not the problem, just the way it is being offered. Because Helium, he said, with $1 billion of public community funding, has “no use” after three years.

As per the slide, parent Nova Labs has “failed to sign customers, implement SLA(s), or plan network evolution”, he suggested. The community behind it, originally bedsit enthusiasts on to a good thing, are not motivated by “IoT adoption but [by] crypto-mania”, said Nemish. Just look on eBay, where 10,000 secondhand Helium miners (gateways) are being flogged, to see how its star has fallen, he said – along with its stock, with HNT trading up 12 percent at around $5 at writing, on the back of a deal for decentralised 5G with T-Mobile in the US, but down from a high of nearly $30 a few months ago.

Here's a belated apology from seasoned tech reporter @gigastacey, an early believer and promoter of @Helium.

Stacey has made $20,000 from a hotspot that served... $0.05 of IoT data.

Even smart folks have trouble seeing through Web3 Ponzis. pic.twitter.com/TXYvA4dIi0

— Liron Shapira (@liron) October 2, 2022

The article highlights some heated discussions on the presentation and slides. You can read the whole article here.

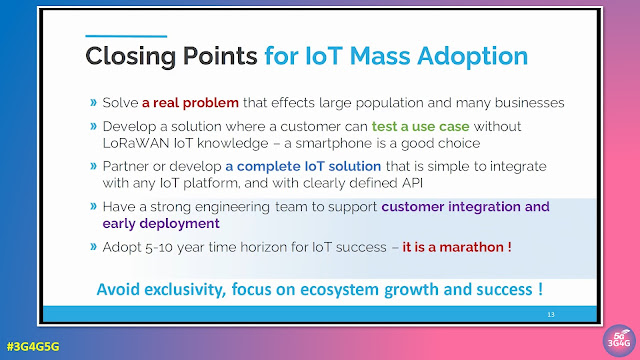

The closing slide nicely summarises that IoT deployment is a marathon, not a sprint. End users are interested in solving real-world problems, so providers should partner to develop complete IoT solutions that integrate simply with any IoT platform through clearly defined APIs. They also need a strong engineering team to support customer integration and early deployment.

Here is the video of the talk for anyone interested:

Related Posts:

- The 3G4G Blog: Are there 50 Billion IoT Devices yet?

- The 3G4G Blog: Edge Computing Tutorial from Transforma Insights

- 3G4G: Internet of Things (IoT) and Machine-2-Machine (M2M)

- Connectivity Technology Blog: The Wide Variety of Internet of Things (IoT) Technologies for Different Situations