One way operators in a country, region or geographic area differentiate themselves from one another is through the reach of their networks, so it is not in their interest to allow national roaming. Occasionally a regulator may force them to allow it, especially in rural or remote areas. Operators may also choose to allow national roaming to reduce their network deployment costs.

During disasters or emergencies, if an operator's infrastructure goes down, its subscribers can still use other networks to make emergency calls, but not for normal services. This can be a serious problem, as people may be unable to contact friends, family or work.

A recent example of this kind of outage occurred in Japan, when the KDDI network failed. Some 39 million users were affected, and many of them couldn't even make emergency calls. If Disaster Roaming had been enabled, this kind of situation could have been avoided.

South Korea has had a proprietary disaster roaming system in operation since 2020, as can be seen in the video above. This automatic disaster roaming is only available for 4G and 5G.

I've been waiting for this for many years. https://t.co/Wcl34M1aZy Disaster roaming, now part of 3gpp rel17. https://t.co/F4LAyIp7VE

— Silke Holtmanns (@SHoltmanns) October 2, 2022

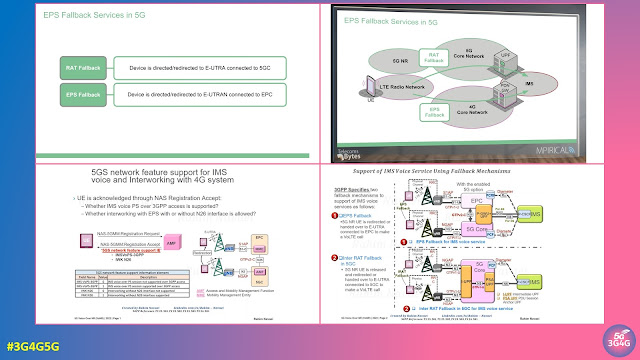

In 3GPP Release-17, Disaster Roaming has been specified for both LTE and 5G NR. In LTE, the disaster roaming information is carried in SIB Type 30, while in 5G NR it is carried in SIB Type 15.
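
To make the per-RAT split concrete, here is a minimal Python sketch of what a UE-side reader might do. Only the SIB numbers come from the text above; the `DisasterRoamingBroadcast` class, its fields, the helper functions and the placeholder PLMN IDs are hypothetical simplifications, not the actual ASN.1 content defined in the RRC specifications (TS 36.331 for LTE, TS 38.331 for NR).

```python
from dataclasses import dataclass, field

# Release-17 SIB carrying disaster roaming information, per RAT
# (from the text: LTE -> SIB Type 30, 5G NR -> SIB Type 15).
DISASTER_ROAMING_SIB = {"LTE": 30, "NR": 15}


@dataclass
class DisasterRoamingBroadcast:
    """Hypothetical, simplified view of the disaster roaming SIB content.

    Not the ASN.1 structure from the RRC specifications; names are illustrative.
    """
    serving_plmn: str
    # Hypothetical: PLMNs (MCC-MNC) with a Disaster Condition whose
    # subscribers this cell accepts as Disaster Inbound Roamers.
    plmns_with_disaster_condition: set[str] = field(default_factory=set)


def sib_to_read(rat: str) -> int:
    """Return the SIB type number that carries disaster roaming info on this RAT."""
    if rat not in DISASTER_ROAMING_SIB:
        raise ValueError(f"Disaster Roaming is not specified for RAT {rat!r}")
    return DISASTER_ROAMING_SIB[rat]


def cell_offers_disaster_roaming(sib: DisasterRoamingBroadcast, home_plmn: str) -> bool:
    """True if this (hypothetical) broadcast covers the UE's home PLMN."""
    return home_plmn in sib.plmns_with_disaster_condition


# Example with placeholder test PLMN IDs.
sib = DisasterRoamingBroadcast("001-01", {"001-02"})
print(sib_to_read("NR"))                            # 15
print(cell_offers_disaster_roaming(sib, "001-02"))  # True
```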

3GPP TS 23.501 section 5.40 provides a summary of the other information needed for disaster roaming. Quoting from it:

Subject to operator policy and national/regional regulations, 5GS provides Disaster Roaming service (e.g. voice call and data service) for the UEs from PLMN(s) with Disaster Condition. The UE shall attempt Disaster Roaming only if:

- there is no available PLMN which is allowable (see TS 23.122 [17]);

- the UE is not in RM-REGISTERED and CM-CONNECTED state over non-3GPP access connected to 5GCN;

- the UE cannot get service over non-3GPP access through ePDG;

- the UE supports Disaster Roaming service;

- the UE has been configured by the HPLMN with an indication of whether Disaster roaming is enabled in the UE set to "disaster roaming is enabled in the UE" as specified in clause 5.40.2; and

- a PLMN without Disaster Condition is able to accept Disaster Inbound Roamers from the PLMN with Disaster Condition.
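
The six conditions in the quoted clause form a simple conjunction: the UE may only attempt Disaster Roaming when all of them hold. A minimal sketch, assuming each condition has already been evaluated into a boolean elsewhere; the `UeState` fields are hypothetical names, one per bullet, not identifiers from the specification.

```python
from dataclasses import dataclass


@dataclass
class UeState:
    """Hypothetical inputs to the Disaster Roaming decision, one per quoted bullet."""
    allowable_plmn_available: bool       # an allowable PLMN exists (TS 23.122)
    registered_connected_non3gpp: bool   # RM-REGISTERED + CM-CONNECTED over non-3GPP access to 5GCN
    service_via_epdg: bool               # service available over non-3GPP access through ePDG
    supports_disaster_roaming: bool      # UE capability
    enabled_by_hplmn: bool               # indication set to "disaster roaming is enabled in the UE"
    plmn_accepts_inbound_roamers: bool   # a PLMN without Disaster Condition accepts Disaster Inbound Roamers


def may_attempt_disaster_roaming(ue: UeState) -> bool:
    """True only if every condition in the quoted list is met."""
    return (
        not ue.allowable_plmn_available
        and not ue.registered_connected_non3gpp
        and not ue.service_via_epdg
        and ue.supports_disaster_roaming
        and ue.enabled_by_hplmn
        and ue.plmn_accepts_inbound_roamers
    )


# Home network down, no non-3GPP (e.g. Wi-Fi) fallback, everything else in place.
stranded = UeState(False, False, False, True, True, True)
print(may_attempt_disaster_roaming(stranded))  # True
```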

In this Release of the specification, the Disaster Condition only applies to NG-RAN nodes, which means the rest of the network functions except one or more NG-RAN nodes of the PLMN with Disaster Condition can be assumed to be operational.

A UE supporting Disaster Roaming is configured with the following information:

- Optionally, indication of whether disaster roaming is enabled in the UE;

- Optionally, indication of 'applicability of "lists of PLMN(s) to be used in disaster condition" provided by a VPLMN';

- Optionally, list of PLMN(s) to be used in Disaster Condition.
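
The three optional configuration items can be modelled as optional fields, with `None` meaning "not configured". The `plmns_to_use()` helper is a hypothetical simplification of how the applicability indication might be applied: it assumes a list received from a VPLMN is used only when the HPLMN has marked such lists as applicable, and otherwise falls back to the HPLMN-configured list. The exact selection rules are in TS 23.122; all names here are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DisasterRoamingConfig:
    """The three optional items; None means the HPLMN has not configured it."""
    enabled: Optional[bool] = None                 # "disaster roaming is enabled/disabled in the UE"
    vplmn_lists_applicable: Optional[bool] = None  # applicability of VPLMN-provided lists
    plmn_list: Optional[List[str]] = None          # PLMN(s) to be used in Disaster Condition


def plmns_to_use(cfg: DisasterRoamingConfig,
                 vplmn_list: Optional[List[str]] = None) -> List[str]:
    """Simplified assumption: prefer a VPLMN-provided list only if marked applicable."""
    if cfg.vplmn_lists_applicable and vplmn_list:
        return list(vplmn_list)
    # Otherwise fall back to the HPLMN-configured list (empty if none).
    return list(cfg.plmn_list or [])


cfg = DisasterRoamingConfig(enabled=True, plmn_list=["001-02"])
print(plmns_to_use(cfg, vplmn_list=["001-03"]))  # ['001-02']
```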

The Activation of Disaster Roaming is performed by the HPLMN by setting the indication of whether Disaster roaming is enabled in the UE to "disaster roaming is enabled in the UE" using the UE Parameters Update Procedure as defined in TS 23.502 [3]. The UE shall only perform disaster roaming if the HPLMN has configured the UE with the indication of whether disaster roaming is enabled in the UE and set the indication to "disaster roaming is enabled in the UE". The UE, registered for Disaster Roaming service, shall deregister from the PLMN providing Disaster Roaming service if the received indication of whether disaster roaming is enabled in the UE is set to "disaster roaming is disabled in the UE".
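
The activation rules above amount to a small state machine: disaster roaming is allowed only once the indication has been configured and set to "enabled", and a later "disabled" indication forces a UE registered for Disaster Roaming service to deregister. A minimal sketch, assuming the UE Parameters Update delivery and the real deregistration procedure are handled elsewhere; the class and method names are hypothetical, and `deregister()` only flips a flag.

```python
from enum import Enum
from typing import Optional


class Indication(Enum):
    ENABLED = "disaster roaming is enabled in the UE"
    DISABLED = "disaster roaming is disabled in the UE"


class DisasterRoamingUe:
    """Minimal sketch of how a UE reacts to the HPLMN's enable/disable indication."""

    def __init__(self) -> None:
        self.indication: Optional[Indication] = None  # not configured yet
        self.registered_for_disaster_roaming = False

    def may_perform_disaster_roaming(self) -> bool:
        # Allowed only if the indication is configured AND set to "enabled".
        return self.indication is Indication.ENABLED

    def on_ue_parameters_update(self, indication: Indication) -> None:
        """Handle a new indication delivered via UE Parameters Update (TS 23.502)."""
        self.indication = indication
        if indication is Indication.DISABLED and self.registered_for_disaster_roaming:
            self.deregister()

    def register_for_disaster_roaming(self) -> bool:
        if not self.may_perform_disaster_roaming():
            return False
        self.registered_for_disaster_roaming = True
        return True

    def deregister(self) -> None:
        # Placeholder for deregistering from the PLMN providing Disaster Roaming service.
        self.registered_for_disaster_roaming = False


ue = DisasterRoamingUe()
print(ue.register_for_disaster_roaming())  # False (indication not configured)
ue.on_ue_parameters_update(Indication.ENABLED)
print(ue.register_for_disaster_roaming())  # True
ue.on_ue_parameters_update(Indication.DISABLED)
print(ue.registered_for_disaster_roaming)  # False (deregistered)
```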

From my point of view, it makes complete sense to have this enabled for when disaster strikes. Earlier this year, local governments in Queensland, Australia, urged the Federal Government to immediately commit to a trial of domestic mobile roaming during emergencies, based on a recommendation by the Regional Telecommunications Independent Review Committee. Other countries and regions are likely to demand this sooner or later as well. It is in everyone's interest that operators enable it as soon as possible.

Related Posts:

- The 3G4G Blog: 2G/3G Shutdown may Cost Lives as 4G/5G Voice Roaming is a Mess

- The 3G4G Blog: Transitioning from eCall to NG-eCall and the Legacy Problem

- The 3G4G Blog: Study of Use cases and Communications Involving IoT devices in Emergency Solutions

- The 3G4G Blog: enhanced Public Warning System (ePWS) in 3GPP Release-16

- The 3G4G Blog: ETWS detailed in LTE and UMTS